This time, as promised in the last post, let's do the most basic text crawl using Python.

I have already covered a more difficult crawl, so this one adds less, but I'd still like to post it to keep things organized.

It also seems cleaner to do it in Python.

The JavaScript version of this text crawl is in the post below.

https://sangminem.tistory.com/28

Let's implement a program that performs the same function in Python.

First, import the necessary modules.

```python
import requests
import re
import os
from bs4 import BeautifulSoup
```

If you don't have the requests module and the bs4 package, install them first.

```
pip install requests
pip install bs4
```

Enter the commands above to install them.

The requests module makes it easy to send HTTP requests.

The BeautifulSoup class in the bs4 package works on HTML DOM data and makes it easy to extract the content you want.

The re module was imported to remove special characters, and the os module was imported to check whether a folder exists before creating it.
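As a minimal sketch of the folder check mentioned above, here is one way to make sure an output folder exists before writing into it. The helper name `ensure_folder` and the folder name `output` are assumptions for illustration and are not part of the original script.

```python
import os
import tempfile


def ensure_folder(path):
    """Create the folder if it does not exist yet and return its path."""
    if not os.path.isdir(path):
        os.makedirs(path)
    return path


# Demonstrate inside a temporary directory so nothing is left behind.
with tempfile.TemporaryDirectory() as base:
    target = ensure_folder(os.path.join(base, "output"))  # hypothetical folder name
    created = os.path.isdir(target)
    # Calling it again is harmless because the folder already exists.
    ensure_folder(target)
```

`os.makedirs(path, exist_ok=True)` performs the same check and creation in a single call.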

Next, let's get the url information from the file contents.

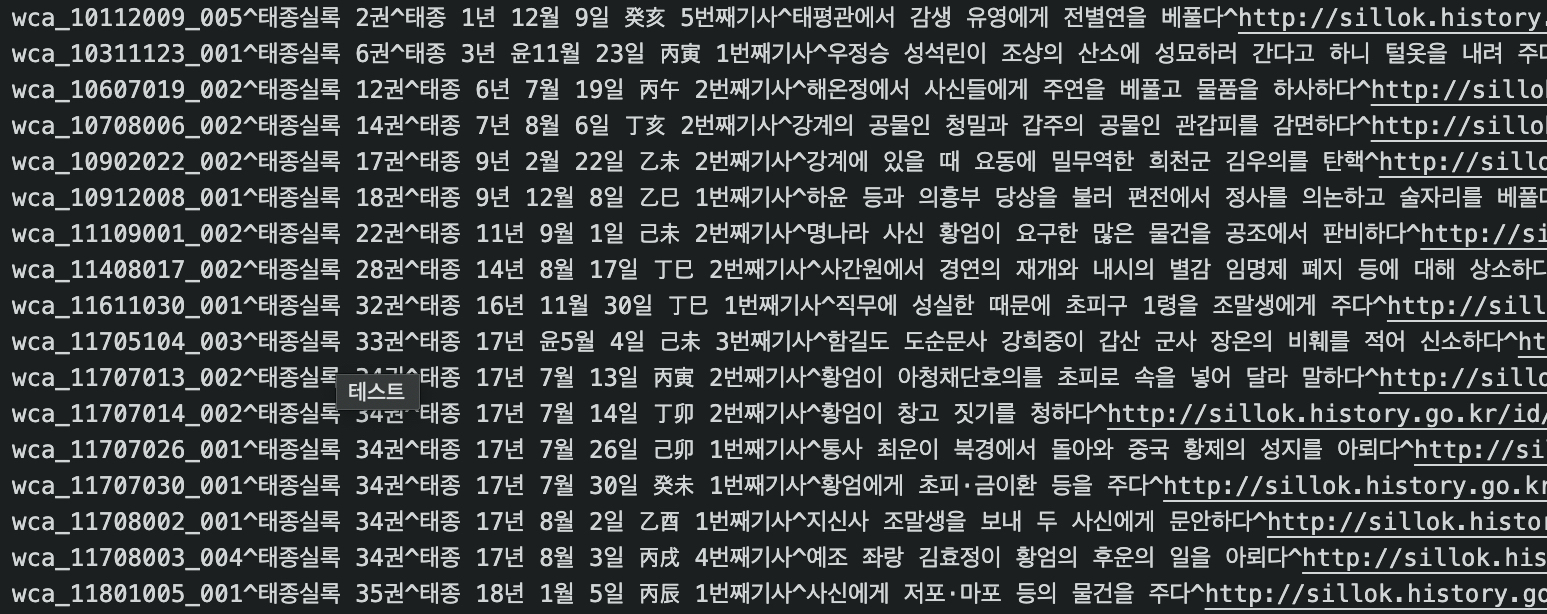

The file contents are as above.

Each line has URL information at the end.

Here is the code:

```python
r = open('sample.txt', mode='rt', encoding='utf-8')
url_list = []
for line in r:
    # Fields are separated by '^'; the URL is the last one
    temp = line.split('^')
    # Strip the trailing newline from the URL
    url = re.sub('\n','',temp[len(temp)-1])
    url_list.append(url)
r.close()
```

The data is read line by line, split on '^', and only the URL is stored in the url_list list.

Closing the file afterwards releases the file handle.
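The same parsing step can be sketched as a small function that works on any iterable of lines, which makes it easy to try without a file. The function name `extract_urls` and the sample lines are made up for illustration; only the `field^field^url` shape comes from the description above.

```python
def extract_urls(lines, sep="^"):
    """Return the last sep-delimited field of every non-empty line."""
    urls = []
    for line in lines:
        line = line.strip()  # drops the trailing newline and stray spaces
        if not line:
            continue  # skip blank lines
        urls.append(line.rsplit(sep, 1)[-1])
    return urls


# Made-up sample lines in the same "field^field^url" shape.
sample_lines = [
    "Seoul^2020-01-01^https://example.com/a\n",
    "\n",
    "Busan^2020-01-02^https://example.com/b\n",
]
url_list = extract_urls(sample_lines)
print(url_list)
```

With a real file, passing the open file object works the same way, for example `with open('sample.txt', encoding='utf-8') as r: url_list = extract_urls(r)`. The `with` block also closes the file automatically.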

Next, I will request each URL one by one and extract the necessary information.

```python
# Header row: column names separated by tabs
all_contents = "Location"+"\t"+"Date"+"\t"+"Title"+"\t"+"Trans."+"\t"+"Origin.\r\n"

for url in url_list:
    # Fetch the page and parse its HTML
    res = requests.get(url)
    soup = BeautifulSoup(res.content, 'html.parser')

    # The .tit_loc element holds both the location and the date
    tit_loc = soup.select('.tit_loc')[0]

    date_text = ''
    for span in tit_loc.findAll('span'):
        # The date lives in the child <span>
        date_text = re.sub('[\n\r\t]','',span.get_text().strip())
        # Remove the <span> so only the location text remains
        span.extract()

    loc_text = re.sub('[\n\r\t]','',tit_loc.get_text().strip())
```

First, the header row names each column, with the names separated by '\t'.

Then each URL is requested in turn and the response is stored in the res variable.

The fetched HTML is parsed with BeautifulSoup so that it is easy to query, and the result is stored in the soup variable.
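A long list of URLs is more likely to hit a slow or failing page, so the request step can be wrapped as in the sketch below. This is one possible hardening, not the original script's approach: the function name `fetch_soup`, the injectable `get` parameter, and the 10-second timeout are all assumptions chosen for illustration.

```python
import requests
from bs4 import BeautifulSoup


def fetch_soup(url, get=requests.get, timeout=10):
    """Request url and return the parsed page.

    get is injectable so the function can be exercised without a network.
    """
    res = get(url, timeout=timeout)  # give up instead of hanging forever
    res.raise_for_status()  # raises requests.HTTPError on 4xx/5xx responses
    return BeautifulSoup(res.content, "html.parser")
```

Inside the loop, `soup = fetch_soup(url)` would then stand in for the separate request and parse lines.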

With the select method, I got the element that holds the location and the date and put it in the tit_loc variable.

The date is extracted directly from the child span node of that element.

The location is the text of the tit_loc element excluding the child span tag, so it is extracted after removing the span tag with the extract method.
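To see the effect of extract without requesting a page, the same steps can be run on a small inline snippet. The markup below is made up to mimic the structure described above, and `find_all` is used as the current name for `findAll` in Beautiful Soup 4.

```python
import re
from bs4 import BeautifulSoup

# Made-up markup: a .tit_loc element with the date in a child <span>.
html = """
<div class="tit_loc">
  Seoul Station
  <span>2020-01-01</span>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
tit_loc = soup.select(".tit_loc")[0]

date_text = ""
for span in tit_loc.find_all("span"):
    date_text = re.sub(r"[\n\r\t]", "", span.get_text().strip())
    span.extract()  # detach the span so it no longer contributes text

# With the span gone, only the location text is left in the element.
loc_text = re.sub(r"[\n\r\t]", "", tit_loc.get_text().strip())
print(date_text, "|", loc_text)
```

Without the `span.extract()` call, `tit_loc.get_text()` would still include the date text.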

Next, we will extract the title, translation, and original text.

```python
for url in url_list:
    res = requests.get(url)
    soup = BeautifulSoup(res.content, 'html.parser')

    tit_loc = soup.select('.tit_loc')[0]

    date_text = ''
    for span in tit_loc.findAll('span'):
        date_text = re.sub('[\n\r\t]','',span.get_text().strip())
        span.extract()

    loc_text = re.sub('[\n\r\t]','',tit_loc.get_text().strip())

    # Added code
    title = soup.select('h3.search_tit')[0]
    title_text = re.sub('[\n\r\t]','',title.get_text().strip())

    # The translation and the original share the same tag and class
    contents = soup.select('div.ins_view_pd')
    content_text = []
    for content in contents:
        temp = ''
        for paragraph in content.findAll('p','paragraph'):
            # Drop the unneeded <ul> content before reading the text
            for ul in paragraph.findAll('ul'):
                ul.extract()
            temp += paragraph.get_text()
        content_text.append(re.sub('[\n\r\t]','',temp.strip()))
        temp = ''

    # One tab-separated row per URL
    all_contents += loc_text+'\t'+date_text+'\t'+title_text+'\t'+content_text[0]+'\t'+content_text[1]+'\r\n'

# Write all rows to the result file as UTF-8
file = open('result.txt','wb')
file.write(all_contents.encode('utf-8'))
file.close()
```

The title was extracted directly, after confirming that it uses the search_tit class on an h3 tag.

The translated text and the original text use the same tag and class, so both are collected into a list at once.

Within each of them, I collected the text of all p tags that have the paragraph class.

The content in the ul tag is unnecessary, so I removed it with the extract method.

As a result, content_text[0] contains the translation and content_text[1] contains the original text.
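Indexing content_text[1] raises an IndexError if a page happens to have fewer than two matching blocks. A minimal sketch of a guard, assuming empty strings are an acceptable placeholder, could look like this. The helper name `translation_and_original` is hypothetical.

```python
def translation_and_original(content_text):
    """Return (translation, original); missing entries become ''."""
    first_two = list(content_text[:2])
    while len(first_two) < 2:
        first_two.append("")
    return first_two[0], first_two[1]


print(translation_and_original(["translated text", "original text"]))
print(translation_and_original(["translated text"]))
print(translation_and_original([]))
```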

Concatenating all the extracted values with '\t' completes one row.

Every URL is requested to build its row, and the rows are accumulated in a single variable.

Finally, the contents are written to the result.txt file.
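As an alternative to joining strings by hand, the standard csv module can write the same tab-separated layout and will quote any field that happens to contain a tab. This is a sketch with made-up rows and a temporary output path, not the original script's output step.

```python
import csv
import os
import tempfile

header = ["Location", "Date", "Title", "Trans.", "Origin."]
# Made-up rows standing in for the values extracted in the loop.
rows = [
    ["Seoul", "2020-01-01", "Title A", "translation A", "original A"],
    ["Busan", "2020-01-02", "Title B", "translation B", "original B"],
]

with tempfile.TemporaryDirectory() as tmp:
    out_path = os.path.join(tmp, "result_sketch.txt")  # hypothetical file name

    # newline="" lets the csv module control the line endings itself.
    with open(out_path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f, delimiter="\t", lineterminator="\r\n")
        writer.writerow(header)
        writer.writerows(rows)

    # Read the file back to check what was written.
    with open(out_path, encoding="utf-8", newline="") as f:
        written = f.read()

print(written)
```

Collecting rows in a list also avoids rebuilding one long string on every iteration.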

Run the script as follows:

```
python crawling_text.py
```

It may take some time if there are many URLs.

In this way, the data has been cleaned up and stored neatly.

Since the fields are separated by tabs, the result is also easy to edit in Excel.

The source certainly looks shorter and cleaner than the JavaScript version.

As I wrote this, I once again felt how simple and powerful Python is. :)
